3 discrepancy problems with Google campaigns • Adobe Analytics

3 common problems with Google campaign reporting in Adobe Analytics: why they happen and how we can improve report quality.

Adobe does not have an official integration with Google products, which would simplify everything. And yes, I know you can link, for example, Google Search 360, but the process is so complicated and requires so many emails between Adobe and Google that I’d prefer not to go through it again.

Even though Google’s own products show discrepancies between one another, they follow a fairly repeatable logic, so you can roughly predict which report discrepancies to expect.

But comparing the same campaign’s data between Google and Adobe Analytics is an entirely different level.

I very often find myself investigating why the discrepancies between page visits registered by Adobe Analytics and by Google Floodlights are so random and illogical.

For example, in a day-to-day comparison of user visits from a single campaign, the difference between Google and Adobe can reach 20-50%. Even splitting desktop and mobile does not help.

Each company counts user visits, traffic, and other metrics differently. Even within Google, products such as Campaign Manager (CM) and Display & Video 360 (DV360) use different algorithms to count impressions and clicks, which is why we often see differences between those systems for the same campaign.

So it is no surprise that almost every week I investigate discrepancies between Google campaign reports and campaign reports in Adobe Analytics, which follows yet another logic.

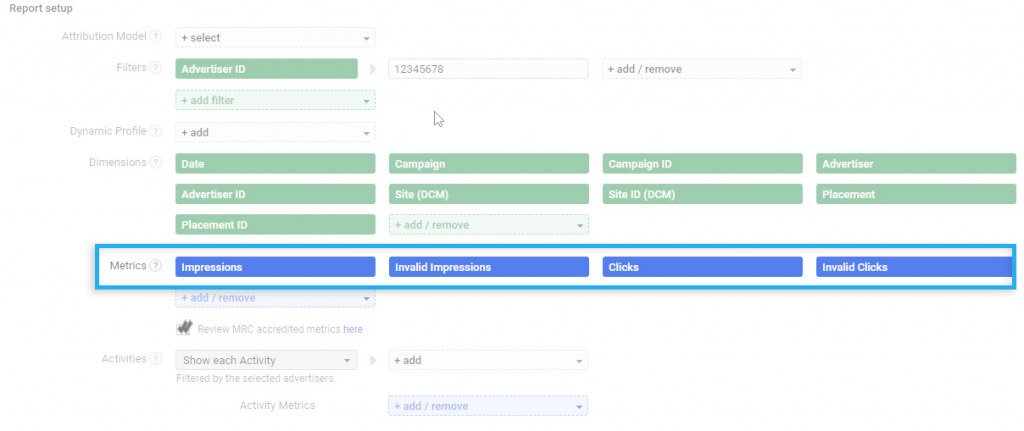

Google distinguishes two kinds of invalid traffic: general invalid traffic (GIVT) and sophisticated invalid traffic (SIVT). Let’s take 100 clicks on our ads. If 20 of them are invalid traffic (SIVT + GIVT), Google counts only 80 valid clicks.

On the website, Adobe Analytics (in the best-case scenario) counts 100 unique visits, but Google Floodlight attributes only 80 of them to the campaign. For the other 20 visits the Floodlight tag still fires, but the activity is not attributed to our campaign.
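To make the arithmetic of this example explicit, here is a minimal Python sketch. The 100 clicks and 20 invalid clicks come from the example above; the function names are hypothetical, and it assumes the best case where Adobe Analytics records every click as a visit.

```python
def floodlight_attributed(total_clicks: int, invalid_clicks: int) -> int:
    """Clicks Google keeps after filtering invalid traffic (GIVT + SIVT)."""
    return total_clicks - invalid_clicks


def discrepancy_pct(adobe_visits: int, google_visits: int) -> float:
    """Gap between Adobe and Google counts, as a percentage of Adobe visits."""
    return 100 * (adobe_visits - google_visits) / adobe_visits


clicks = 100            # clicks on our ads (example from the text)
invalid = 20            # GIVT + SIVT filtered out by Google (example from the text)
adobe_visits = clicks   # assumption: best case, every click becomes an Adobe visit

attributed = floodlight_attributed(clicks, invalid)
unattributed = adobe_visits - attributed  # Floodlight fires, but without campaign attribution

print(attributed, unattributed, discrepancy_pct(adobe_visits, attributed))
```

With these numbers the sketch prints `80 20 20.0`: the whole 20% gap is invalid traffic that Adobe keeps and Google drops.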

I’d recommend passing a campaign ID or any other tracking identifier from the landing page URL into a Floodlight custom variable (u-variable), and then checking the generated Google Floodlight report, including the unattributed data.
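As a sketch of which value could go into the u-variable, the snippet below pulls a campaign identifier out of a landing page URL. The query parameter name `utm_campaign` and the example URL are assumptions (use whatever identifier your campaign actually appends), and on a real website this logic would live in the page’s tag code rather than in Python.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def campaign_id_from_url(landing_url: str, param: str = "utm_campaign") -> Optional[str]:
    """Return the first value of `param` from the URL's query string, or None.

    The default parameter name is an assumption; swap in your own tracking parameter.
    """
    query = parse_qs(urlparse(landing_url).query)  # maps name -> list of values
    values = query.get(param)
    return values[0] if values else None


# Hypothetical landing page URL
url = "https://www.example.com/offer?utm_source=dv360&utm_campaign=spring_sale_2021"
u1_value = campaign_id_from_url(url)  # value to send in the Floodlight u-variable
print(u1_value)
```

Here `u1_value` is `"spring_sale_2021"`; a URL without the parameter yields `None`, which you may want to replace with an explicit placeholder so those hits are still visible in the report.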

Based on this new u-variable, you can estimate the real visit count from the campaign and compare it with Adobe Analytics.
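The estimate itself could look like the following Python sketch. The row layout (`campaign`, `u1`, `activities`) is a hypothetical simplification of a Floodlight report export, and it assumes the attributed campaign and the u-variable use the same identifier; real report columns will differ.

```python
from typing import Dict, Iterable, Optional, TypedDict


class FloodlightRow(TypedDict):
    """One row of a hypothetical, simplified Floodlight report export."""
    campaign: Optional[str]  # attributed campaign, or None if unattributed
    u1: str                  # campaign ID captured from the landing page URL
    activities: int          # Floodlight activity count for this row


def estimate_campaign_visits(rows: Iterable[FloodlightRow], campaign_id: str) -> Dict[str, int]:
    """Add unattributed activity whose u-variable matches the campaign to the attributed count."""
    attributed = 0
    unattributed = 0
    for row in rows:
        if row["campaign"] == campaign_id:
            attributed += row["activities"]
        elif row["campaign"] is None and row["u1"] == campaign_id:
            # Floodlight fired on a campaign landing page, but Google did not attribute it
            unattributed += row["activities"]
    return {"attributed": attributed, "unattributed": unattributed,
            "estimated_total": attributed + unattributed}


# Made-up rows mirroring the 100-click example
rows: list = [
    {"campaign": "spring_sale", "u1": "spring_sale", "activities": 80},
    {"campaign": None, "u1": "spring_sale", "activities": 20},
    {"campaign": None, "u1": "other_campaign", "activities": 5},
]
print(estimate_campaign_visits(rows, "spring_sale"))
```

The `estimated_total` (100 here) is the figure to hold against Adobe Analytics visits, instead of the attributed 80 alone.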

It is not possible to predict what share of clicks/visits will be invalid. It depends on the campaign itself: the device, the environment, the audience, and the ad exchange serving the ads.

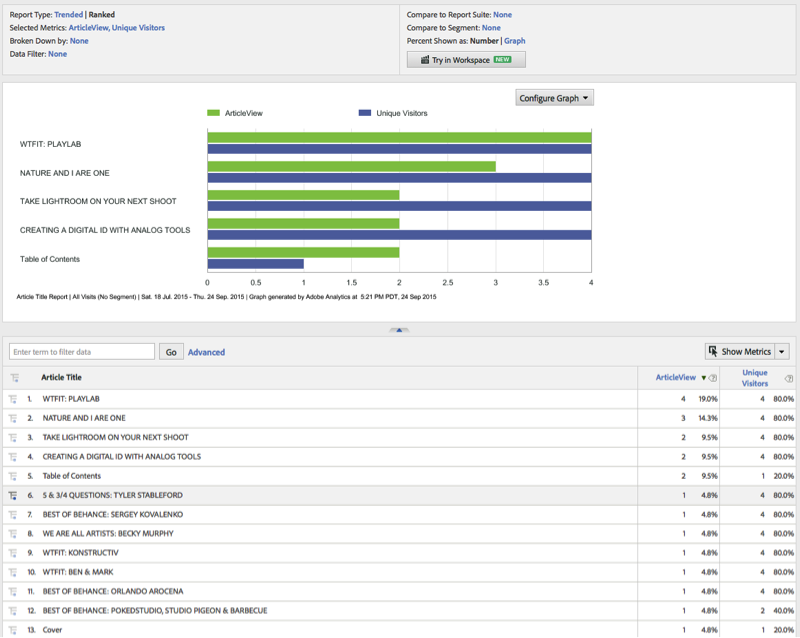

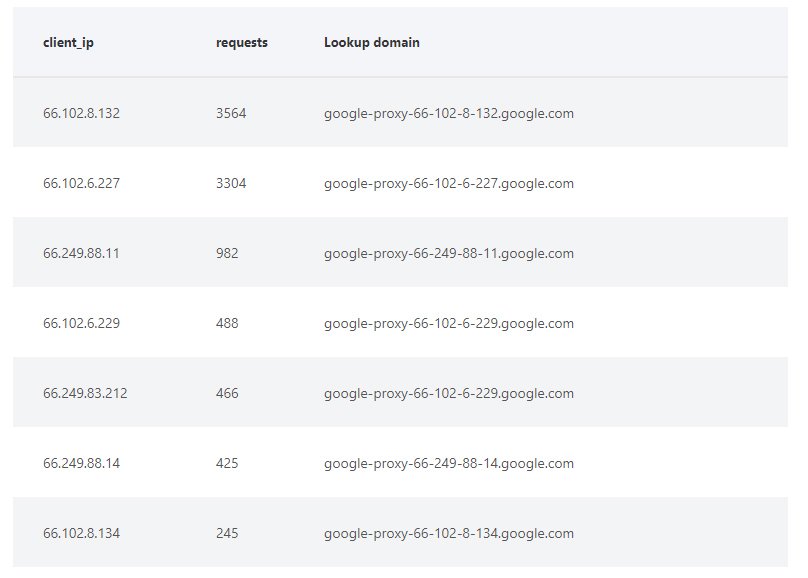

This is quite an exciting topic I’ve recently discovered. Google Display & Video 360 has its own ad and landing page validation tool, which visits the website through Google proxy servers. Adobe Analytics counts these visits, but Google Floodlight and other Google products do not.

This means that Adobe Analytics counts test visits. Depending on the size of the campaign, they can sometimes account for 2-4% of all visits, which is still a significant number.

The only way to deal with this is to ask your IT engineers to capture the visitors’ public IP addresses or a special HTTP header, so that you can distinguish within Adobe Analytics whether the traffic comes from Google proxies.

Then you can build a segment to analyze how many of the campaign visits come from Google software. This would definitely improve data quality and reduce discrepancies when comparing visits with Google Floodlight reports.
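The segment logic can be sketched in Python with the standard `ipaddress` module. The CIDR ranges below are documentation-only placeholders (RFC 5737), not real Google proxy ranges, and the header name `x-validation-check` is purely hypothetical; the real markers have to come from your IT team or from Google.

```python
import ipaddress
from typing import Iterable, Mapping, Tuple

# Placeholder ranges from RFC 5737 documentation space; NOT real Google proxy ranges.
PROXY_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]
# Hypothetical header name; replace it with the marker your IT team can capture.
PROXY_HEADER = "x-validation-check"


def is_google_proxy(ip: str, headers: Mapping[str, str]) -> bool:
    """Flag a hit as proxy traffic if its IP or its headers match the known markers."""
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in PROXY_NETWORKS):
        return True
    return PROXY_HEADER in {name.lower() for name in headers}


def proxy_share_pct(visits: Iterable[Tuple[str, Mapping[str, str]]]) -> float:
    """Percentage of campaign visits, given as (ip, headers) pairs, flagged as proxy traffic."""
    visits = list(visits)
    if not visits:
        return 0.0
    flagged = sum(1 for ip, headers in visits if is_google_proxy(ip, headers))
    return 100 * flagged / len(visits)


# Made-up campaign visits: 97 regular, 2 matched by IP, 1 matched by header
visits = ([("203.0.113.10", {})] * 97
          + [("192.0.2.1", {})] * 2
          + [("203.0.113.11", {"X-Validation-Check": "1"})])
print(proxy_share_pct(visits))
```

In Adobe Analytics itself, the same rule would be expressed as a segment on whatever dimension stores the IP or header flag; the sketch only shows the matching and share calculation.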

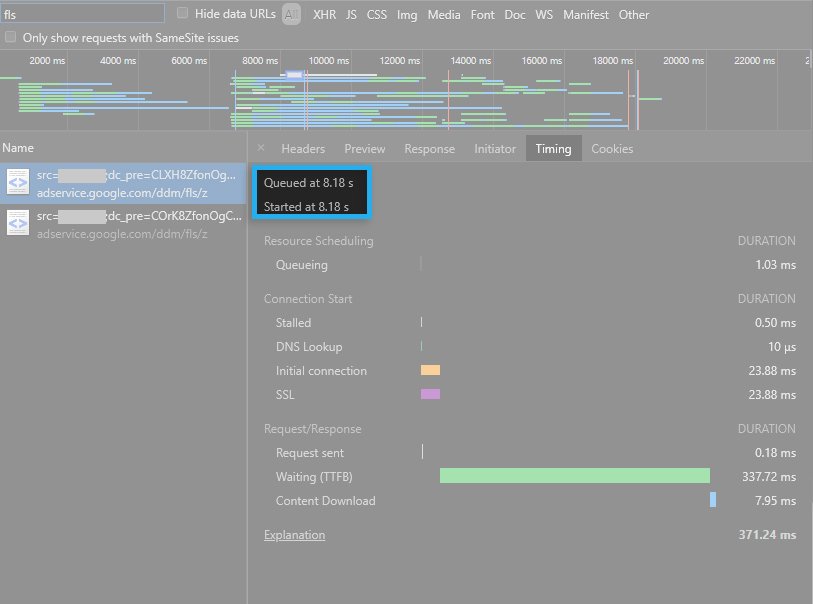

Another common problem is delaying the firing of Google Floodlights while the page is loading. The idea is to reduce the time the page needs to become interactive for the user, which is a good thing. But if the website weighs 5-15 MB and makes 200-500 different requests, it cannot be fast, whatever we do with the Floodlights.

In general, we need to answer the big questions: why do we implement our tracking pixel, and do we really need the other 50-100 pixels firing on the same page?

But let’s assume our website is not from the “hardcore” category and our campaign traffic is split 50/50 between desktop and mobile. On desktop, the gap between Adobe Analytics firing very early while the page is loading and our Google Floodlight firing with a delay could be around 1-3 s, which could cause discrepancies of around 5-10%. On mobile devices on a 4G cellular network, the gap could rise to 3-7 s, which usually produces a difference in the reports of around 15-25%.
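One simplified way to see why a delay costs visits: if a visitor leaves or navigates away before a tag fires, that tag never records the visit. The Python sketch below assumes we know how many seconds each session lasted before the visitor left (the numbers are made up) and computes the share of sessions a tag firing at a given delay would miss. It illustrates the mechanism only; it is not how the percentages above were measured.

```python
from typing import Sequence


def missed_share_pct(leave_times_s: Sequence[float], fire_delay_s: float) -> float:
    """Percentage of sessions that end before a tag firing at `fire_delay_s` seconds."""
    if not leave_times_s:
        return 0.0
    missed = sum(1 for t in leave_times_s if t < fire_delay_s)
    return 100 * missed / len(leave_times_s)


# Made-up "seconds until the visitor left" for 20 sessions, for illustration only
sessions = [2.2, 3.5, 4.0, 5.5, 6.0, 7.5, 8.0, 9.0, 10.0, 12.0,
            15.0, 18.0, 20.0, 25.0, 30.0, 40.0, 45.0, 60.0, 90.0, 120.0]

adobe_missed = missed_share_pct(sessions, 0.5)            # assumption: Adobe fires at 0.5 s
floodlight_desktop = missed_share_pct(sessions, 3.0)      # assumption: 3 s delayed Floodlight
floodlight_mobile = missed_share_pct(sessions, 7.0)       # assumption: 7 s delay on 4G
print(adobe_missed, floodlight_desktop, floodlight_mobile)
```

With this sample the early tag misses nothing, while the 3 s and 7 s tags miss 5% and 25% of sessions: the longer the delay, the more short sessions fall through the gap.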

If we switched Google Floodlight to fire while the page is loading (on load), it would fire 0.5-2 s after Adobe Analytics on desktop, so discrepancies could decline to around 2-5%. On mobile devices, the time difference should be around 1.5-4 s, so discrepancies should be around 5-10%.
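For the 50/50 desktop/mobile split assumed above, the overall campaign discrepancy is roughly a traffic-weighted average of the two device ranges. The Python sketch below applies that weighting to the ranges from the two previous paragraphs; it assumes each percentage is relative to that device’s own visits, and `blended_range` is a hypothetical helper name.

```python
from typing import Tuple

Range = Tuple[float, float]  # (low %, high %)


def blended_range(desktop: Range, mobile: Range, desktop_share: float = 0.5) -> Range:
    """Traffic-weighted discrepancy range for a desktop/mobile split."""
    mobile_share = 1 - desktop_share
    low = desktop_share * desktop[0] + mobile_share * mobile[0]
    high = desktop_share * desktop[1] + mobile_share * mobile[1]
    return (low, high)


# Ranges (in %) from the observations above, 50/50 split
delayed = blended_range(desktop=(5, 10), mobile=(15, 25))
on_load = blended_range(desktop=(2, 5), mobile=(5, 10))
print(delayed, on_load)
```

This gives roughly 10-17.5% overall with a delayed Floodlight versus 3.5-7.5% when it fires on load; a campaign skewed towards mobile would sit closer to the mobile range.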

Note

The times and discrepancy percentages presented are based on my everyday experience and observations of the campaigns I monitor. This does not mean you will get similar results; my goal here was to show the relationship between desktop and mobile discrepancies.

I don’t recommend delaying the firing of any pixel. If we put a pixel on our website, it has real business value to us, so it would be unwise to make it count inaccurately by default. It is like drinking warm beer: yes, you can, but it does not make any sense.

I hope this article helps you understand the most common problems. If you have any questions, please write in the comment section below.